1. AI Capability of ThingIQ

2. Data Acquisition and Signal Preprocessing

2.1. Vibration Sensor Overview

2.2. Signal sampling

2.3. FFT-Based Feature Extraction

3. AI Model Architecture

3.1. Model Tensor Description

3.2. Training

4. Deployment on MAX78000

5. Conclusion

1. AI Capability of ThingIQ

In modern industrial IoT systems, sensor data, particularly vibration data, plays a critical role in assessing equipment health. However, traditional monitoring approaches based on fixed thresholds are often inadequate in complex operating environments, where load conditions, rotational speeds, and environmental factors vary continuously.

ThingIQ leverages Artificial Intelligence (AI) as a core intelligence layer within its IoT platform, enabling early anomaly detection and predictive maintenance directly at the edge device, with low latency and high energy efficiency. Building on this foundation, ThingIQ adopts an AI-first approach, in which machine learning models are embedded directly into the IoT data processing pipeline. Rather than merely collecting and visualizing sensor data, the platform focuses on analysis, learning, and inference from real-time sensor streams.

This approach is particularly well suited for large-scale industrial and IoT systems, where operational requirements include:

- Early anomaly detection;

- Low-latency response;

- Reduced reliance on cloud-based inference;

- Optimized energy consumption.

To enable reliable and data-driven intelligence, the effectiveness of AI models fundamentally depends on the quality and structure of input data. In industrial environments, raw sensor signals are often noisy, non-stationary, and highly dependent on operating conditions. Therefore, a robust data acquisition and signal preprocessing pipeline is a critical prerequisite for meaningful learning and inference. The following section describes how vibration signals are acquired and transformed through signal preprocessing techniques to extract informative representations for subsequent AI-based analysis.

2. Data Acquisition and Signal Preprocessing

One of ThingIQ’s key AI capabilities is vibration-based anomaly detection, built upon a signal processing and deep learning pipeline designed for industrial IoT environments. Vibration data is acquired in real time from accelerometer sensors and transformed into the frequency domain using the Fast Fourier Transform (FFT). Frequency-domain analysis highlights characteristic vibration patterns associated with normal operating conditions of industrial equipment.

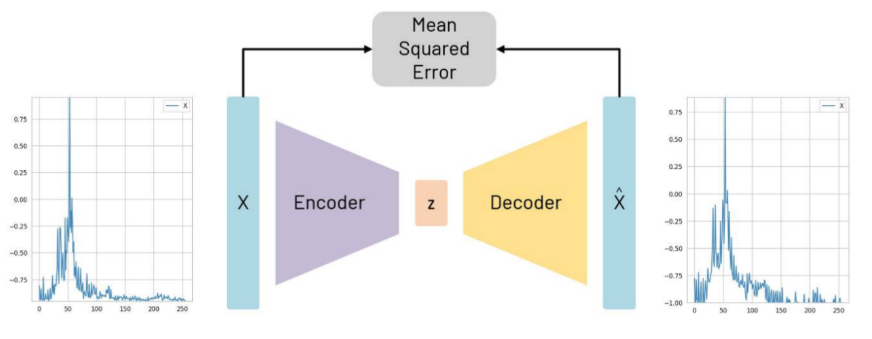

After FFT processing and normalization, the data is fed into a Convolutional Neural Network (CNN) Autoencoder, where the model learns to reconstruct normal vibration patterns. The reconstruction error is then used as a quantitative metric to detect operational anomalies.

This approach enables the system to:

- Detect subtle deviations before physical thresholds are exceeded

- Adapt to changing operating conditions

- Reduce false alarms compared to traditional rule-based methods
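The reconstruction-error idea described above can be sketched as follows. This is a minimal illustration, not ThingIQ's implementation: the function names, the use of mean squared error, and the "mean plus three standard deviations" threshold rule are all assumptions made for the example.

```python
import numpy as np


def reconstruction_error(x, x_hat):
    """Mean squared error between an input spectrum and its reconstruction."""
    x = np.asarray(x, dtype=np.float64)
    x_hat = np.asarray(x_hat, dtype=np.float64)
    return float(np.mean((x - x_hat) ** 2))


def fit_threshold(normal_errors, n_sigma=3.0):
    """Hypothetical rule: mean + n_sigma * std of errors seen on normal data."""
    errs = np.asarray(normal_errors, dtype=np.float64)
    return float(errs.mean() + n_sigma * errs.std())


def is_anomalous(x, x_hat, threshold):
    """Flag a sample whose reconstruction error exceeds the threshold."""
    return reconstruction_error(x, x_hat) > threshold
```

In use, the threshold would be fitted once on errors collected from known-healthy operation, then applied to each new spectrum and its autoencoder output.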

2.1. Vibration Sensor Overview

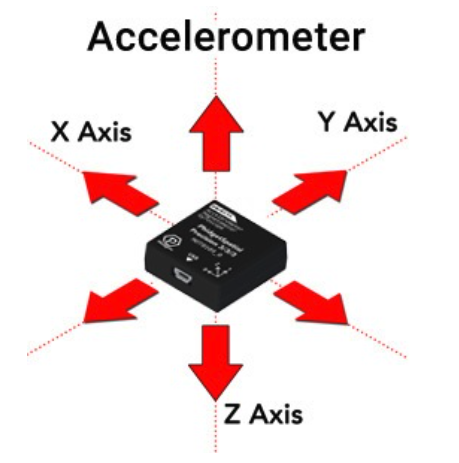

The vibration data is collected using a 3-axis MEMS accelerometer mounted directly on the mechanical structure being monitored.

The sensor measures linear acceleration along three orthogonal axes:

- X-axis: Typically aligned with the horizontal direction

- Y-axis: Typically aligned with the vertical direction

- Z-axis: Typically aligned with the axial or radial direction of the rotating shaft

Figure 1: Accelerometers Measure Acceleration in 3 Axes

2.2. Signal sampling

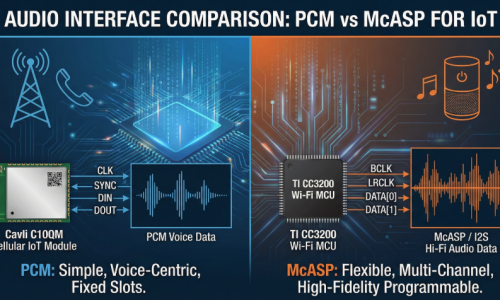

Each axis of the accelerometer produces an analog vibration signal, which is digitized using the MAX78000 ADC.

Typical sampling parameters:

- Sampling frequency (Fs): 20 kHz – 50 kHz

- ADC resolution: 12 bits

- Anti-aliasing filter: Analog low-pass filter applied before the ADC input

The sampling frequency is selected according to the Nyquist criterion: it must be more than twice the highest fault-related vibration frequency of interest, so that these frequencies are captured without aliasing.
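As a small worked illustration of the Nyquist constraint, the sketch below checks whether a given vibration frequency can be captured at a chosen sampling rate, and shows where an under-sampled tone would appear. The helper names are hypothetical, and the example frequencies are chosen only for illustration.

```python
def nyquist_frequency_hz(fs_hz):
    """Highest frequency representable at sampling rate fs_hz."""
    return fs_hz / 2.0


def is_alias_free(f_max_hz, fs_hz):
    """True if f_max_hz lies strictly below the Nyquist frequency."""
    return f_max_hz < nyquist_frequency_hz(fs_hz)


def aliased_frequency_hz(f_hz, fs_hz):
    """Apparent frequency of a tone at f_hz after sampling at fs_hz."""
    f = f_hz % fs_hz
    return min(f, fs_hz - f)
```

For example, at Fs = 20 kHz a 12 kHz component lies above the 10 kHz Nyquist frequency and would fold back to 8 kHz, which is why the analog low-pass filter sits in front of the ADC.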

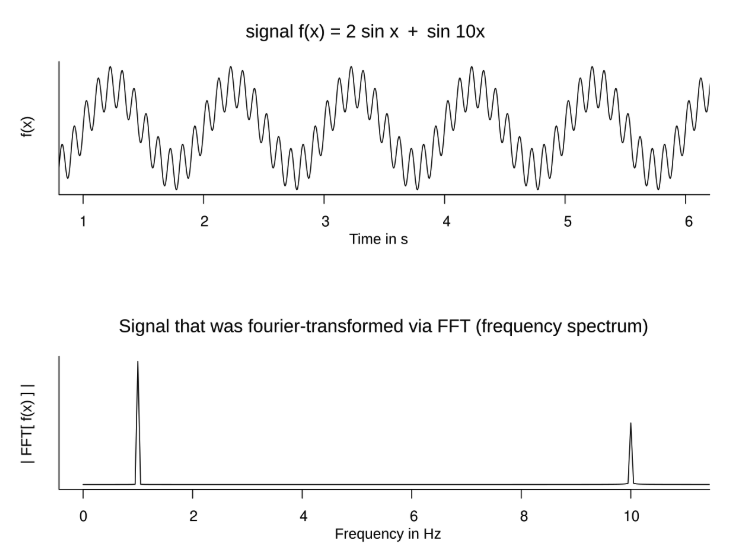

2.3. FFT-Based Feature Extraction

After sampling, the vibration signals from each axis (X, Y, and Z) are transformed from the time domain into the frequency domain using the FFT. Each axis is processed independently.

The FFT converts raw vibration signals into frequency bins, where mechanical faults typically appear as distinct spectral components. Only the magnitude of the FFT is used, as phase information is not required for vibration-based fault detection.

This frequency-domain representation provides compact and informative features that are well suited for predictive maintenance and subsequent AI-based analysis.
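The per-axis magnitude FFT could look roughly like the NumPy sketch below. Several details are assumptions rather than facts from this document: a 512-sample window (chosen so that 256 bins remain per axis, matching the model input length), mean removal, a Hann window, and normalization by the global peak. The function name is hypothetical.

```python
import numpy as np

N_BINS = 256  # frequency bins per axis, matching the model input length


def fft_magnitude_features(samples, n_bins=N_BINS):
    """Convert (2 * n_bins, 3) time-domain samples to (n_bins, 3) magnitudes."""
    samples = np.asarray(samples, dtype=np.float64)
    n_fft = 2 * n_bins
    if samples.shape != (n_fft, 3):
        raise ValueError(f"expected shape ({n_fft}, 3), got {samples.shape}")
    # Assumption: remove the per-axis mean (DC and gravity offset).
    centered = samples - samples.mean(axis=0)
    # Assumption: Hann window to reduce spectral leakage.
    window = np.hanning(n_fft)[:, None]
    # Each axis (column) is transformed independently.
    spectrum = np.fft.rfft(centered * window, axis=0)  # shape (n_bins + 1, 3)
    # Keep magnitude only and drop the final (Nyquist) bin.
    magnitude = np.abs(spectrum)[:n_bins]
    # Assumption: scale so the largest magnitude is 1.
    peak = magnitude.max()
    return magnitude / peak if peak > 0 else magnitude
```

Whatever preprocessing is actually chosen, the same steps have to run identically on the host during training and on the device during inference.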

3. AI Model Architecture

3.1. Model Tensor Description

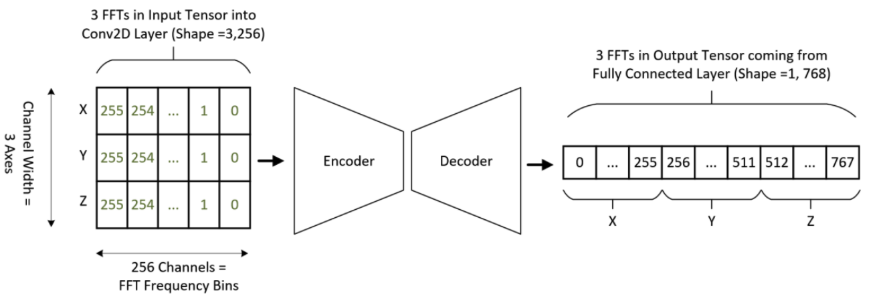

Technical Description of Model Input and Output:

- Model Input Shape: [256, 3] (representing [Signal_Length, N_Channels]).

- Input Details: The input consists of 3 axes (X, Y, and Z), where each axis contains 256 frequency bins (FFT data points).

- Model Output Shape: [768, 1].

- Output Details: The final layer is a Linear layer that produces a flattened output of 768 features (calculated as 256 × 3 = 768).
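The relationship between the two shapes can be illustrated with a short NumPy sketch. The element ordering used here (row-major, so the three axis values of each frequency bin sit next to each other) is an assumption; the actual memory layout expected by the accelerator may differ. The helper names are hypothetical.

```python
import numpy as np

SIGNAL_LENGTH = 256  # frequency bins per axis
N_CHANNELS = 3       # X, Y, Z


def flatten_target(x):
    """Flatten a [256, 3] input into the [768, 1] layout of the output."""
    x = np.asarray(x)
    if x.shape != (SIGNAL_LENGTH, N_CHANNELS):
        raise ValueError(f"expected {(SIGNAL_LENGTH, N_CHANNELS)}, got {x.shape}")
    return x.reshape(-1, 1)  # assumed row-major ordering


def unflatten_output(y):
    """Restore a [768, 1] output to [256, 3] for per-axis comparison."""
    return np.asarray(y).reshape(SIGNAL_LENGTH, N_CHANNELS)
```

Reshaping the output back to [256, 3] makes it straightforward to compare the reconstruction with the input one axis at a time.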

Figure 2: Autoencoder structure, with reconstruction of an example X axis FFT shown

3.2. Training

Figure 3: Input and output tensor shapes and expected location of data

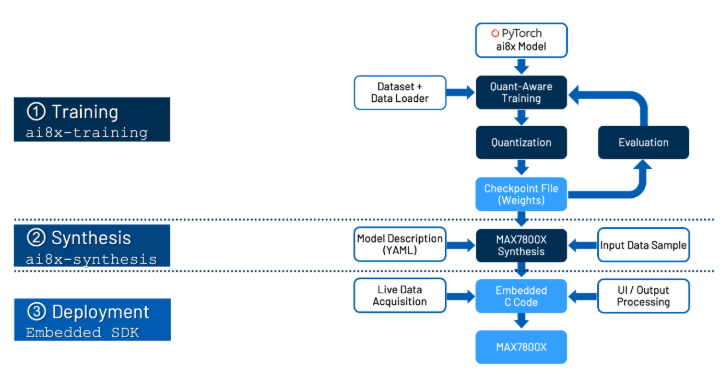

In general, a model is first created on a host PC using conventional frameworks such as PyTorch or TensorFlow. Training requires data that is recorded by the target device and transferred to the PC. One subset of this data becomes the training set, which is used to fit the model. A further subset becomes the validation set, which is used to observe how the loss function (a measure of the network's performance) changes during training.
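A minimal sketch of the training/validation split described above, assuming the recorded spectra are already stacked in an array of shape (n_samples, 256, 3). The function name, the 80/20 ratio, and the fixed random seed are illustrative assumptions.

```python
import numpy as np


def split_train_validation(spectra, val_fraction=0.2, seed=0):
    """Shuffle samples and split them into training and validation subsets."""
    spectra = np.asarray(spectra)
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(spectra))
    n_val = max(1, int(round(len(spectra) * val_fraction)))
    val_idx, train_idx = order[:n_val], order[n_val:]
    return spectra[train_idx], spectra[val_idx]
```

The validation subset is never used to update the weights; it only serves to monitor the loss while training runs.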

Different model types require different kinds and amounts of data. To characterize specific motor faults, the model needs labeled data capturing the vibrations produced by each fault, in addition to healthy vibration data recorded with no fault present.

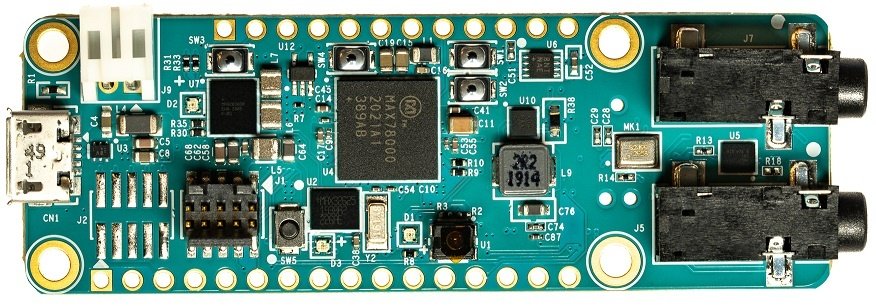

As a result of this process, three files are generated:

- cnn.h and cnn.c: These two files contain the CNN configuration, register writes, and helper functions required to initialize and run the model on the MAX78000 CNN accelerator.

- weights.h: This file contains the trained and quantized neural network weights.

4. Deployment on MAX78000

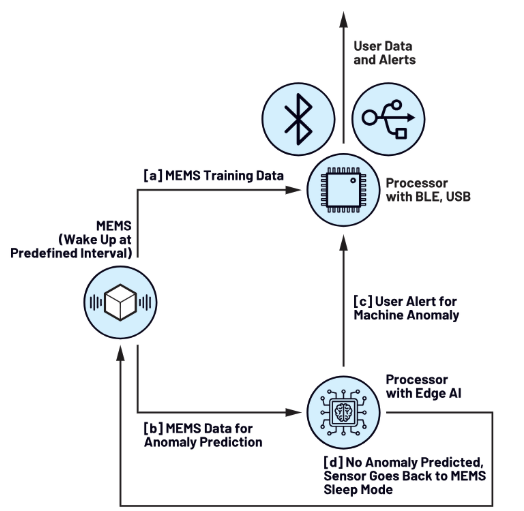

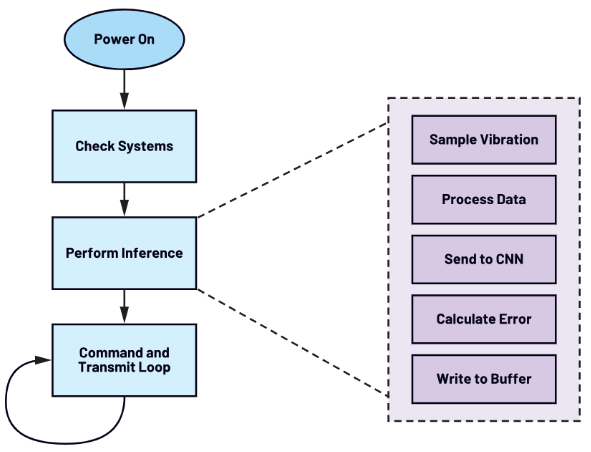

Figure 5: Mode of operation

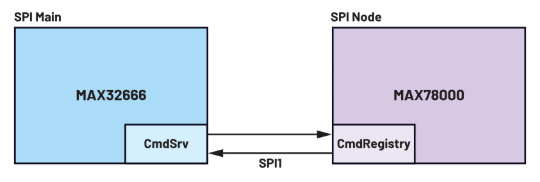

Once the new firmware is deployed, the AI microcontroller operates as a finite state machine, accepting and reacting to commands from the BLE controller over SPI.

When an inference is requested, the AI microcontroller wakes and requests data from the accelerometer. Importantly, it then applies the same preprocessing steps to the time-series data as were used during training. Finally, the preprocessed output is fed to the deployed neural network, which can then report a classification.

Figure 6: AI inference state machine
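The inference flow described above can be modeled on a host machine as a simple finite state machine. The sketch below is only a Python model of the control flow, not the firmware itself: the state names, the "INFER" command string, and the three callbacks are hypothetical, and the SPI transport to the BLE controller is not modeled.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    ACQUIRE = auto()
    PREPROCESS = auto()
    INFER = auto()
    REPORT = auto()


class InferenceStateMachine:
    """Host-side model of the inference loop; all names are illustrative."""

    def __init__(self, read_accelerometer, preprocess, run_model):
        self.state = State.IDLE
        self._read = read_accelerometer
        self._preprocess = preprocess
        self._run_model = run_model
        self._data = None
        self.result = None

    def on_command(self, command):
        """Accept a command; only 'INFER' while idle is modeled here."""
        if self.state is State.IDLE and command == "INFER":
            self.state = State.ACQUIRE
            return True
        return False

    def step(self):
        """Advance one state and return the new state."""
        if self.state is State.ACQUIRE:
            self._data = self._read()
            self.state = State.PREPROCESS
        elif self.state is State.PREPROCESS:
            # Must match the preprocessing used during training.
            self._data = self._preprocess(self._data)
            self.state = State.INFER
        elif self.state is State.INFER:
            self.result = self._run_model(self._data)
            self.state = State.REPORT
        elif self.state is State.REPORT:
            self.state = State.IDLE
        return self.state
```

Injecting the sensor read, preprocessing, and model as callbacks keeps the control flow testable without hardware.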

5. Conclusion

By integrating advanced signal processing techniques with deep learning, ThingIQ provides a robust and scalable approach to vibration-based anomaly detection for industrial IoT systems. The combination of FFT-based feature extraction, CNN autoencoder modeling, quantization-aware training, and edge AI deployment enables early detection of abnormal behavior with low latency and high energy efficiency.

This architecture allows enterprises to move beyond reactive, threshold-based monitoring toward proactive, data-driven operations, establishing a solid foundation for predictive maintenance and intelligent asset management in large-scale IoT environments.