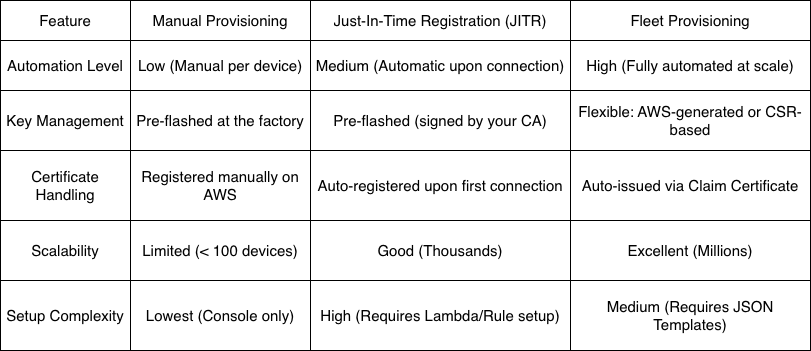

Fleet Provisioning simplifies and fully automates the onboarding of devices into AWS IoT. By separating identity provisioning from the firmware and delegating much of the certificate management to AWS, it lets systems scale easily while maintaining strong security and efficient device lifecycle management.

Thanks to these features, Fleet Provisioning is particularly well suited to mass-produced IoT systems, including cellular devices, gateways, and Wi-Fi devices, where rapid deployment, cost optimization, and long-term operational stability are required. In modern commercial IoT, Fleet Provisioning is not just a technical choice but a best practice for large-scale AWS IoT systems.
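
To make the flow more concrete, here is a minimal sketch of the MQTT topics and the RegisterThing request payload a device would use during Fleet Provisioning by claim. It only builds strings and JSON and does not open a connection. The template name `MyFleetTemplate` and the `SerialNumber` parameter are hypothetical placeholders, and the sample response is made up for illustration.

```python
import json

# Topic a device publishes to when asking AWS IoT for a new key pair and certificate.
CREATE_CERT_TOPIC = "$aws/certificates/create/json"


def register_thing_topic(template_name: str) -> str:
    """Topic for the RegisterThing request of a given provisioning template."""
    return f"$aws/provisioning-templates/{template_name}/provision/json"


def response_topics(request_topic: str) -> tuple:
    """Each request topic has matching accepted/rejected response topics."""
    return (f"{request_topic}/accepted", f"{request_topic}/rejected")


def build_register_thing_payload(create_response: dict, parameters: dict) -> str:
    """Build the RegisterThing body from the certificate-creation response."""
    token = create_response["certificateOwnershipToken"]
    return json.dumps(
        {"certificateOwnershipToken": token, "parameters": parameters}
    )


# Hypothetical response and parameters, for illustration only.
sample_response = {"certificateId": "abc123", "certificateOwnershipToken": "token-xyz"}
payload = build_register_thing_payload(sample_response, {"SerialNumber": "SN-0001"})
topic = register_thing_topic("MyFleetTemplate")
```

In a real device, these topics would be used over an MQTT connection authenticated with the claim certificate, and the device would switch to the newly issued certificate once RegisterThing is accepted.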

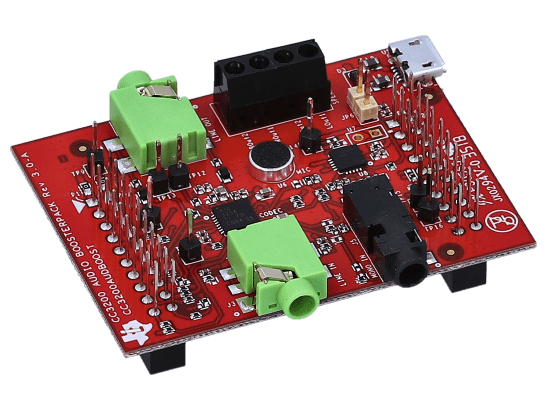

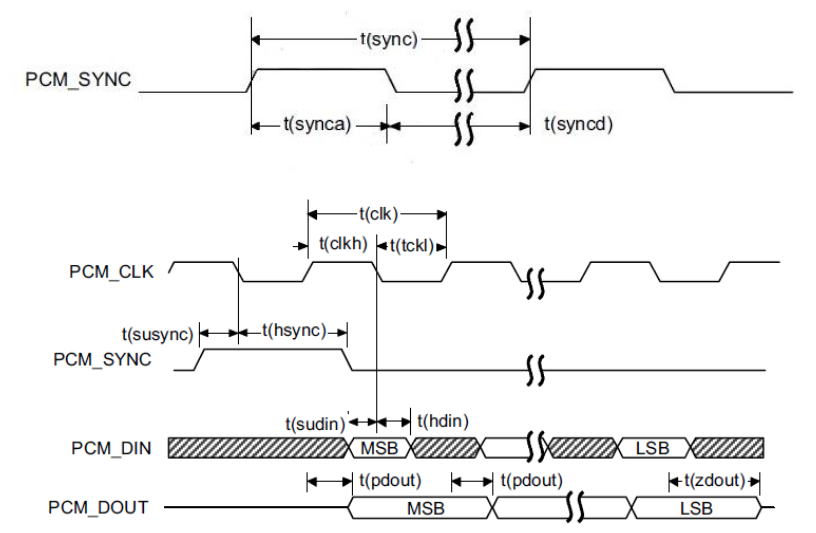

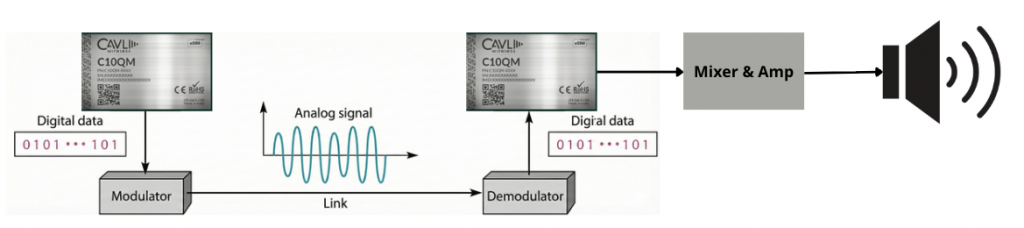

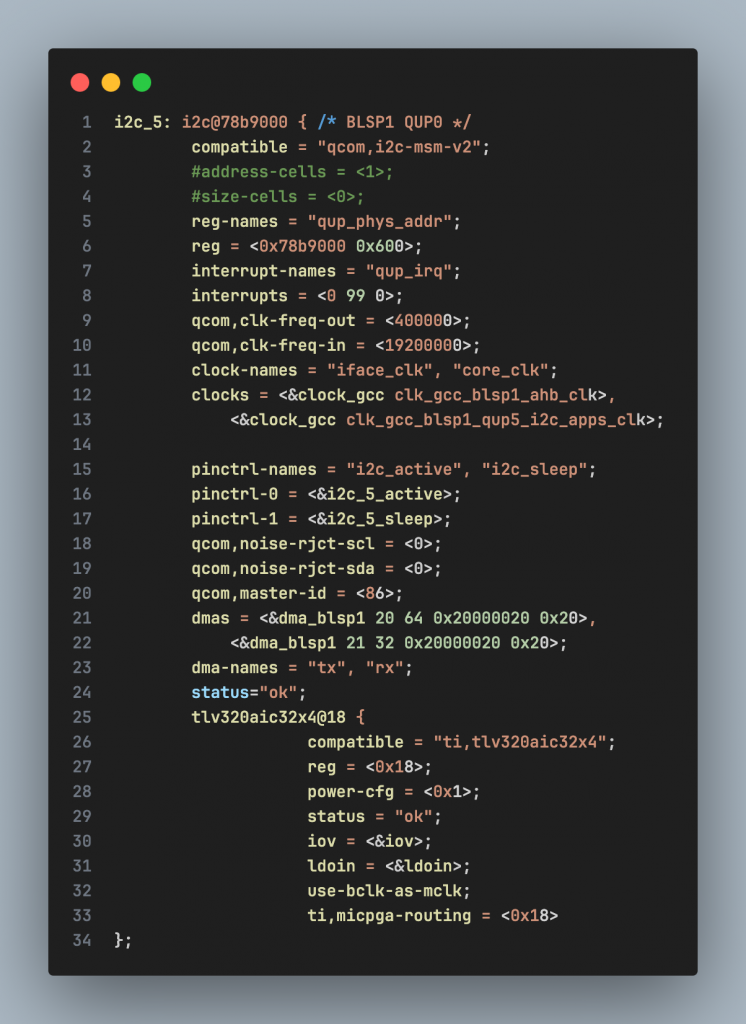

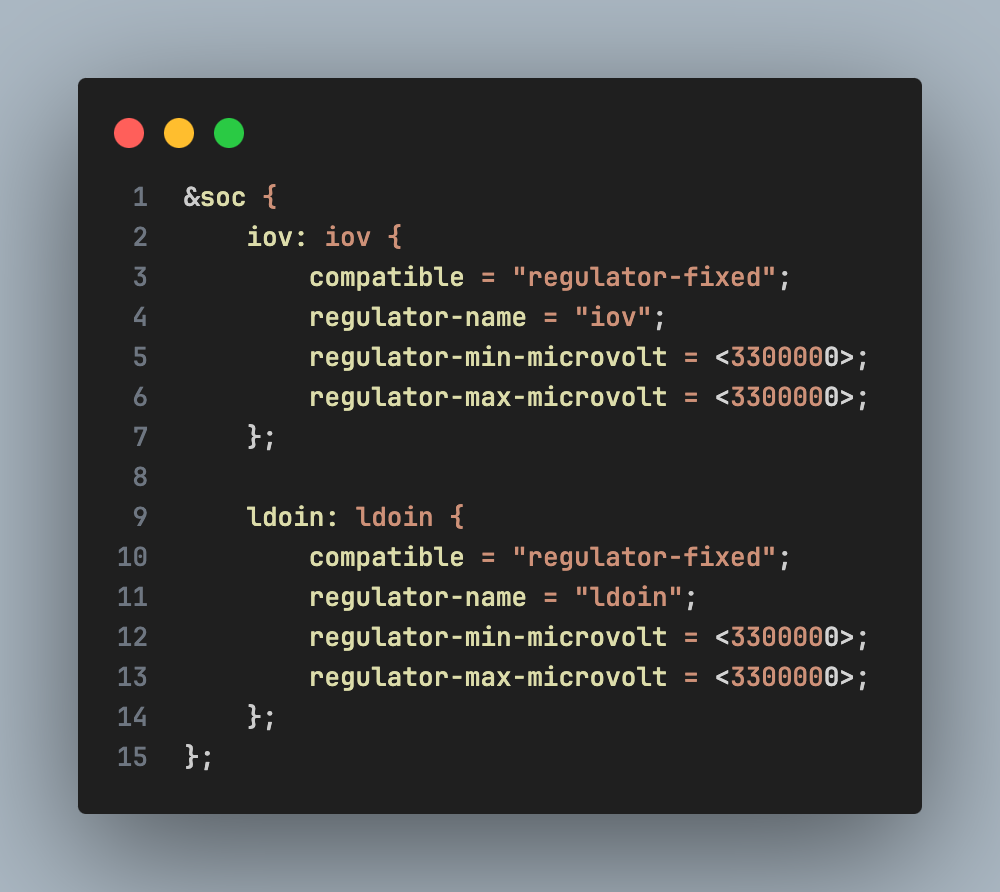

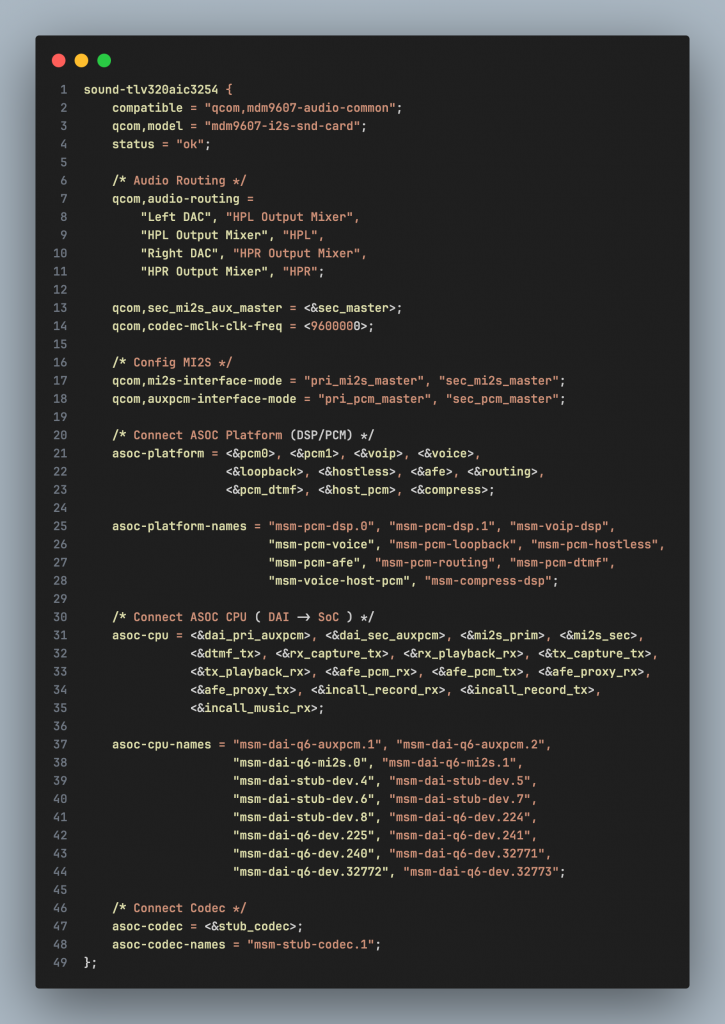

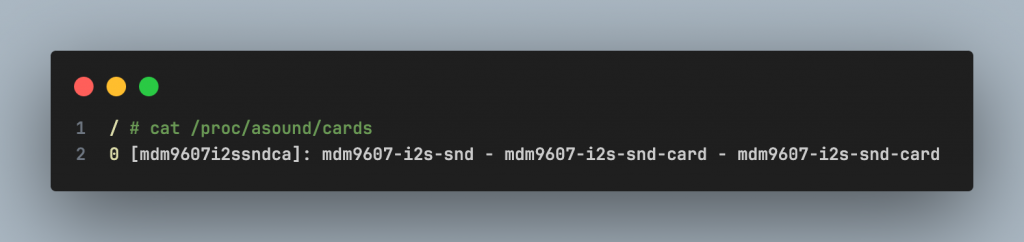

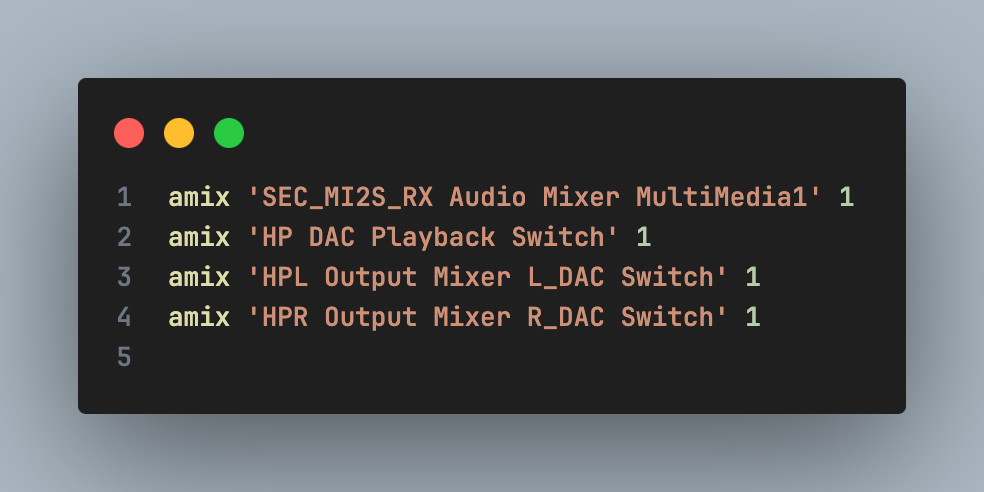

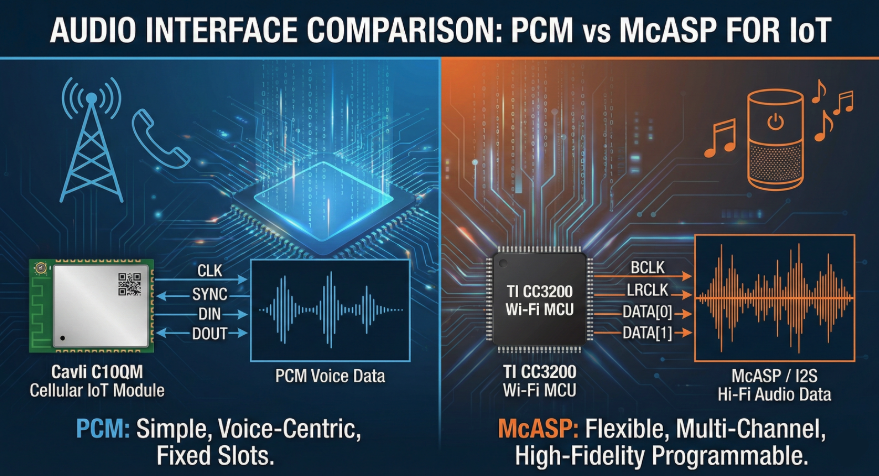

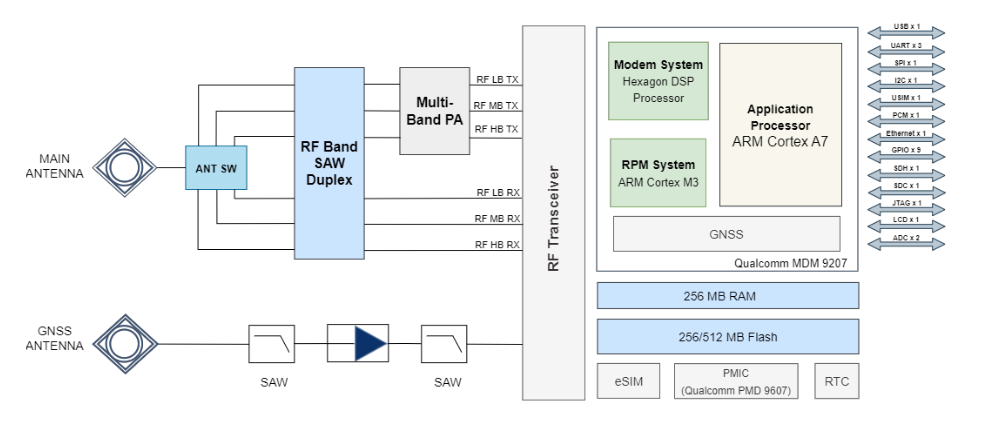

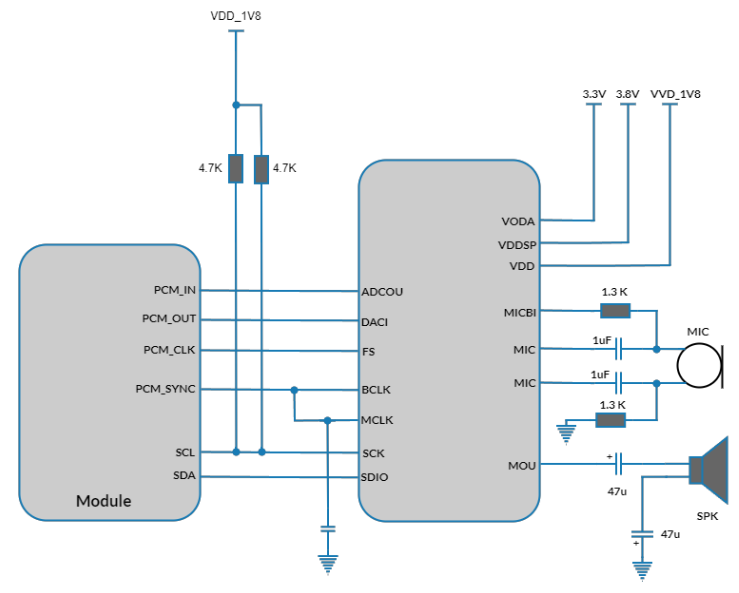

For downlink, audio data travels from the host device to the peripheral device. Digital PCM data is output by the C10QM module’s CPU on the PCM_DOUT pin (Pin 48) and enters the DIN pin of the TLV320AIC3254 codec. The codec passes the signal through an interpolation filter and a digital-to-analog converter (DAC) to produce an analog signal, which is then amplified by the headphone driver and output to the 3.5mm jack. On the software side, the DAPM (Dynamic Audio Power Management) path is routed from the “Left/Right DAC” through the corresponding mixers to the HPL/HPR outputs.
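
The DAPM routing described above can be pictured as a directed graph of widgets. The sketch below is a simplified Python model, not driver code: the widget names are illustrative assumptions loosely following the “DAC to mixer to HPL/HPR” description, and the real codec driver may use different names and additional switches.

```python
from collections import deque

# (sink, source) pairs, mirroring the order DAPM routes are usually written in.
# Widget names are hypothetical and only illustrate the playback path.
ROUTES = [
    ("HPL Output Mixer", "Left DAC"),
    ("HPL Power", "HPL Output Mixer"),
    ("HPL", "HPL Power"),
    ("HPR Output Mixer", "Right DAC"),
    ("HPR Power", "HPR Output Mixer"),
    ("HPR", "HPR Power"),
]


def find_path(routes, source, sink):
    """Breadth-first search from a source widget to a sink widget."""
    graph = {}
    for snk, src in routes:
        graph.setdefault(src, []).append(snk)
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        if path[-1] == sink:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no complete path, so the output would stay powered down


left_path = find_path(ROUTES, "Left DAC", "HPL")
```

The point of the model is that an output only becomes active when a complete path exists from a source to it, which is why a missing mixer connection typically results in silence rather than an error.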

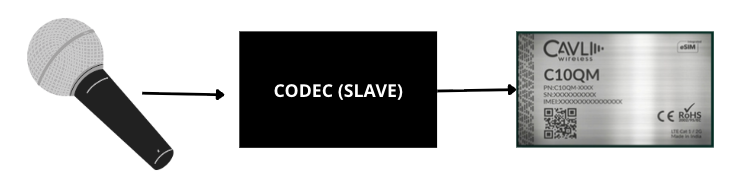

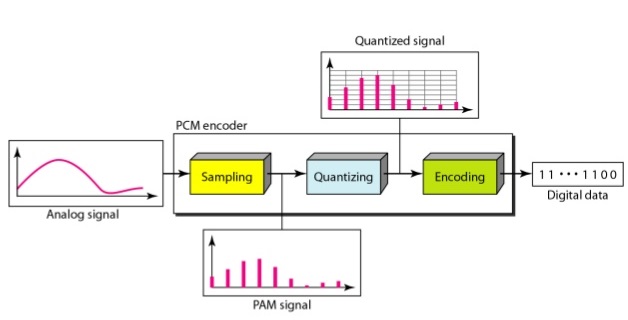

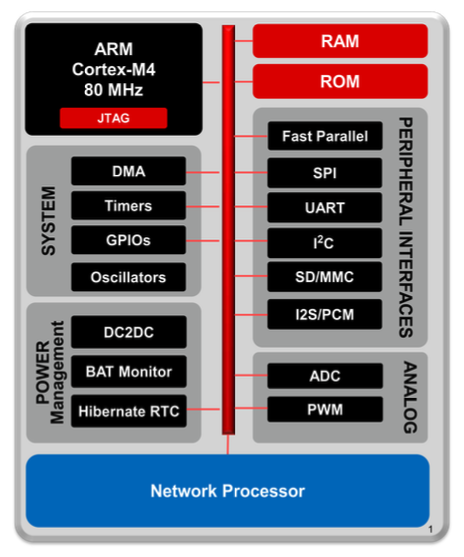

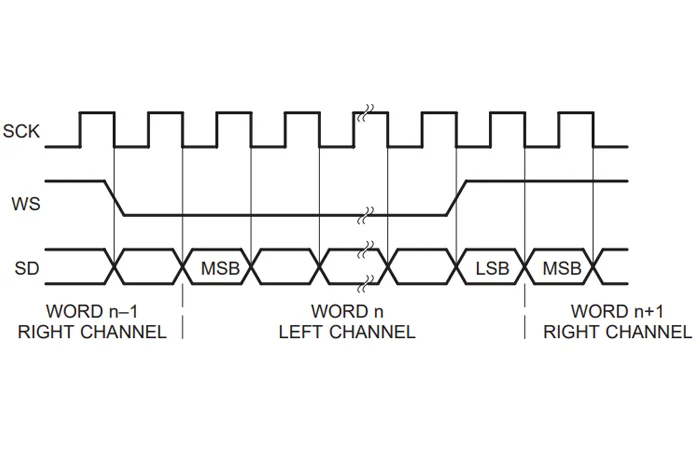

In contrast to the downlink stream, the uplink stream brings the signal from the environment into the processing system. The analog signal from the microphone enters the analog input of the codec, is amplified by the PGA (Programmable Gain Amplifier), and is converted to digital by the ADC. The digital data is then output on the DOUT pin of the codec and goes to the PCM_DIN pin (Pin 47) of the C10QM module. On the C10QM side, the CPU receives this data stream for recording or for voice-call processing.
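
As a rough numerical illustration of the PGA and ADC stages, the sketch below applies a gain in dB to normalized analog samples and quantizes them to signed PCM. It is a simplified model under stated assumptions (16-bit samples, full scale normalized to ±1.0, hard clipping); the actual codec sets gain through register steps and uses its own converter architecture.

```python
def pga_then_adc(samples, gain_db, bits=16):
    """Apply PGA gain (in dB) and quantize to signed PCM integers.

    `samples` are analog values normalized so that +/-1.0 is full scale.
    """
    gain = 10 ** (gain_db / 20)          # dB to linear voltage gain
    full_scale = 2 ** (bits - 1) - 1     # 32767 for 16-bit PCM
    pcm = []
    for s in samples:
        amplified = s * gain
        clipped = max(-1.0, min(1.0, amplified))  # the ADC input saturates
        pcm.append(round(clipped * full_scale))
    return pcm


# A quiet microphone signal with 20 dB of gain applied (illustrative values).
quiet = pga_then_adc([0.0, 0.01, -0.01], 20.0)
# The same gain on a louder signal drives the converter into clipping.
loud = pga_then_adc([0.2, -0.2], 20.0)
```

This shows why PGA gain has to be matched to the microphone level: too little gain wastes ADC resolution, while too much clips the signal before it reaches the PCM_DIN pin.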

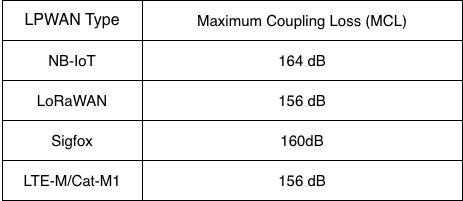

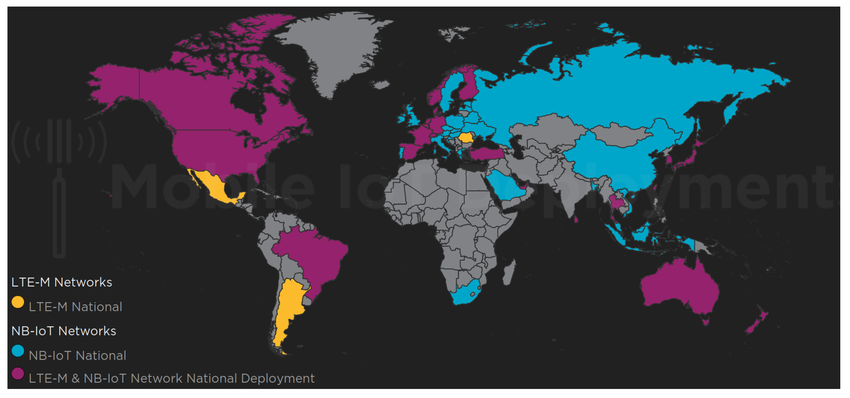

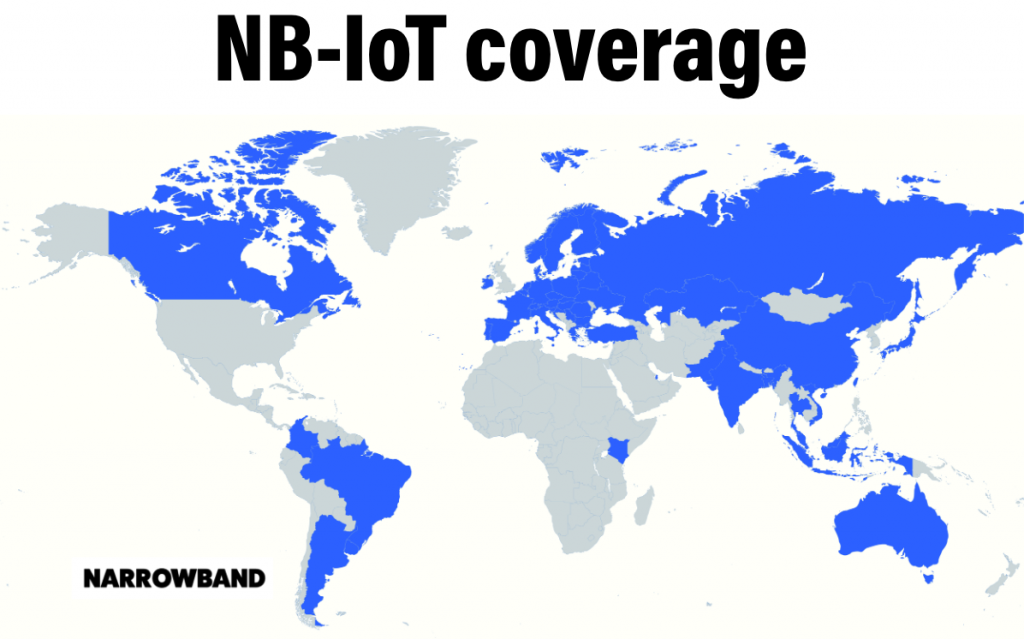

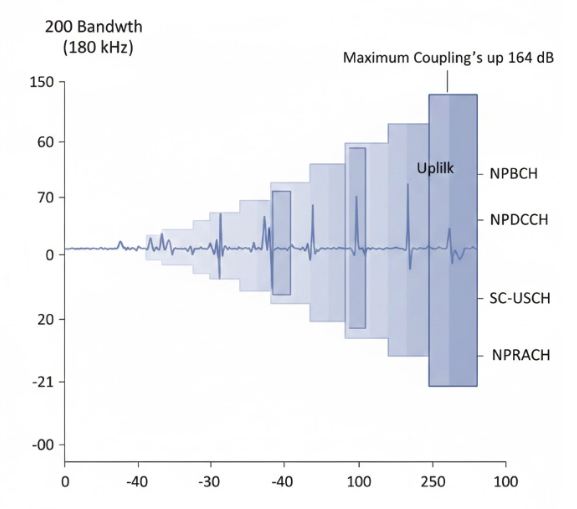

Furthermore, NB-IoT extends coverage by supporting low but stable uplink power, combined with modulation and coding optimized for channels with high attenuation. This allows an NB-IoT base station to serve devices at the cell edge or in noisy environments while maintaining reliable communication. As a result, NB-IoT suits deployments in locations that are hard to reach or inconvenient to maintain, which helps reduce operating costs and extend the lifespan of the IoT system.
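
One way to see why these choices help on high-attenuation channels is a simple uplink link budget. The sketch below computes the maximum coupling loss (transmit power minus receiver sensitivity) from the standard thermal-noise formula. All numeric inputs are illustrative assumptions, not figures from this document.

```python
import math

THERMAL_NOISE_DBM_PER_HZ = -174.0  # thermal noise density at room temperature


def receiver_sensitivity_dbm(bandwidth_hz, noise_figure_db, required_sinr_db):
    """Weakest signal the receiver can decode, in dBm."""
    noise_floor = THERMAL_NOISE_DBM_PER_HZ + 10 * math.log10(bandwidth_hz)
    return noise_floor + noise_figure_db + required_sinr_db


def max_coupling_loss_db(tx_power_dbm, bandwidth_hz, noise_figure_db, required_sinr_db):
    """Largest path attenuation the link can tolerate, in dB."""
    sensitivity = receiver_sensitivity_dbm(bandwidth_hz, noise_figure_db, required_sinr_db)
    return tx_power_dbm - sensitivity


# Assumed values: 23 dBm device, 3 dB base-station noise figure.
narrow = max_coupling_loss_db(23.0, 15_000, 3.0, -11.8)   # narrowband, robust coding
wide = max_coupling_loss_db(23.0, 180_000, 3.0, 0.0)      # wider band, higher SINR need
```

With these assumptions, the narrowband case tolerates roughly 20 dB more attenuation than the wider one, because both the smaller bandwidth and the lower required SINR reduce the sensitivity level the receiver has to reach.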

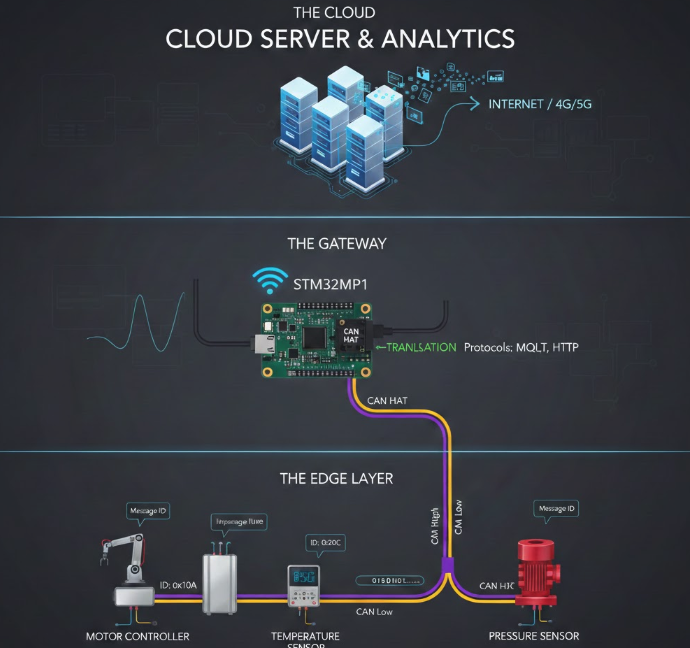

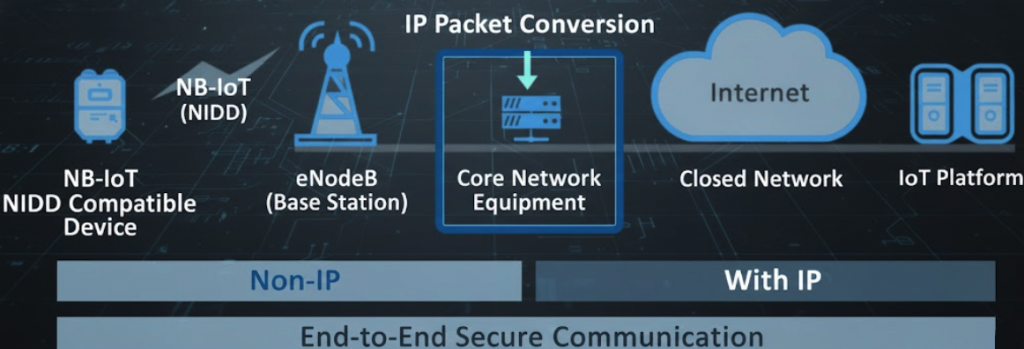

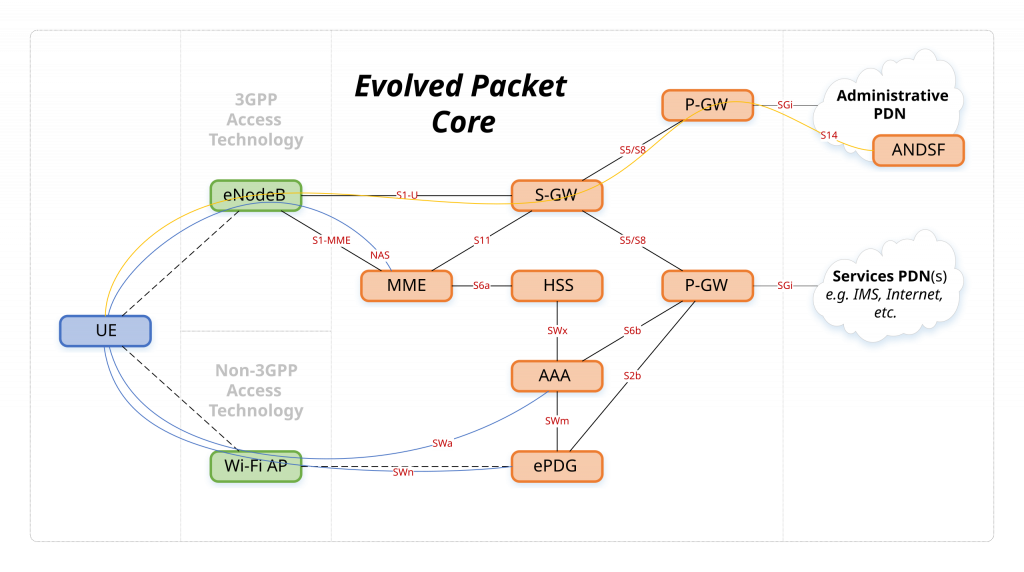

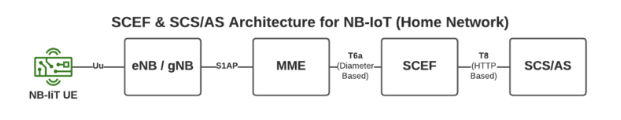

Unlike conventional IP data transmission (via SGW/PGW), this diagram shows the NIDD (Non-IP Data Delivery) route, which lets NB-IoT devices maximize battery life and improves security by avoiding public IP addresses. The SCEF (Service Capability Exposure Function) is a core component unique to the non-IP data transmission route. It acts as a “gateway” that encapsulates raw data from the IoT device into control messages.

Transmission via MME (Mobility Management Entity): In this diagram, data travels from the eNB/gNB over the S1AP interface to the MME, then to the SCEF over the T6a interface. This route confirms that the data is carried on the Control Plane, which is characteristic of the NIDD method and its energy optimization.

Connection to SCS/AS: After passing through the SCEF, data is transferred to the application platform (SCS/AS) over the T8 interface (based on HTTP). This is how devices without IP addresses can still communicate with servers on the Internet.
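
The two routes can be compared side by side with a small data model. This is only a sketch: the NIDD hops follow the description above, while the user-plane interface names for the IP route (S1-U and S5/S8) are added here from the standard EPC architecture rather than taken from the diagram.

```python
# Each hop is (from_node, to_node, interface).
NIDD_ROUTE = [
    ("eNB", "MME", "S1AP"),
    ("MME", "SCEF", "T6a"),
    ("SCEF", "SCS/AS", "T8"),
]

# Conventional IP route over the user plane (interface names are an assumption
# based on the standard EPC architecture, not on the diagram).
IP_ROUTE = [
    ("eNB", "SGW", "S1-U"),
    ("SGW", "PGW", "S5/S8"),
]


def describe(route):
    """Render a route as 'A -[iface]-> B -[iface]-> C'."""
    parts = [route[0][0]]
    for _src, dst, iface in route:
        parts.append(f"-[{iface}]-> {dst}")
    return " ".join(parts)


def nodes(route):
    """Set of network nodes a route passes through."""
    return {n for src, dst, _iface in route for n in (src, dst)}


nidd_text = describe(NIDD_ROUTE)
only_in_nidd = nodes(NIDD_ROUTE) - nodes(IP_ROUTE)
```

Comparing the node sets makes the distinction explicit: the MME and SCEF appear only on the NIDD route, while the SGW and PGW appear only on the IP route.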
